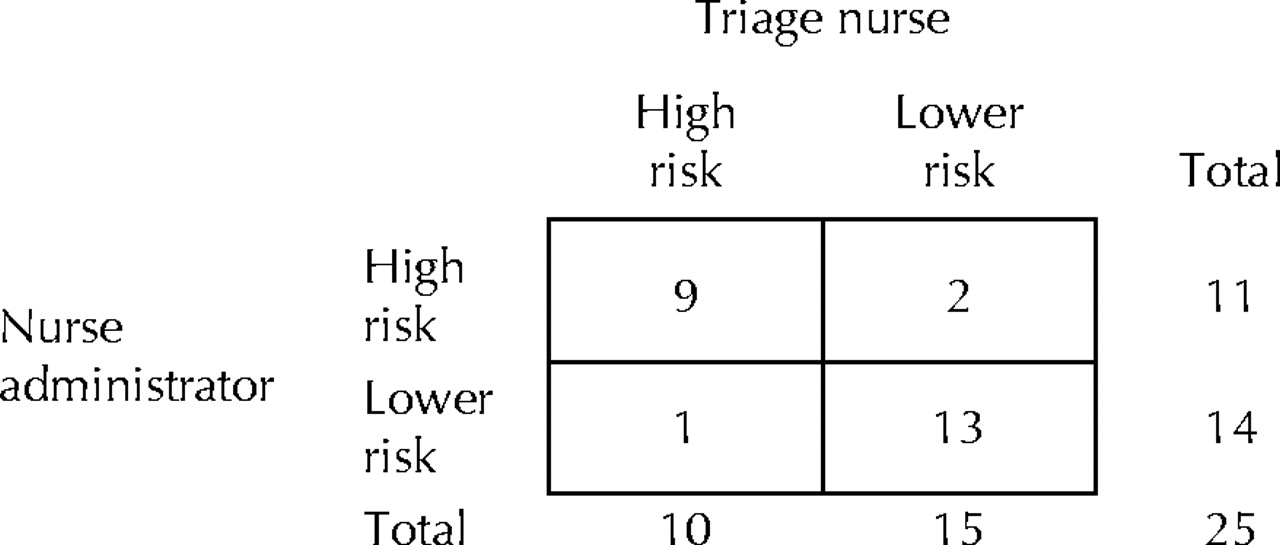

![PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/3-Table1-1.png)

PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

PDF) Sequentially Determined Measures of Interobserver Agreement (Kappa) in Clinical Trials May Vary Independent of Changes in Observer Performance

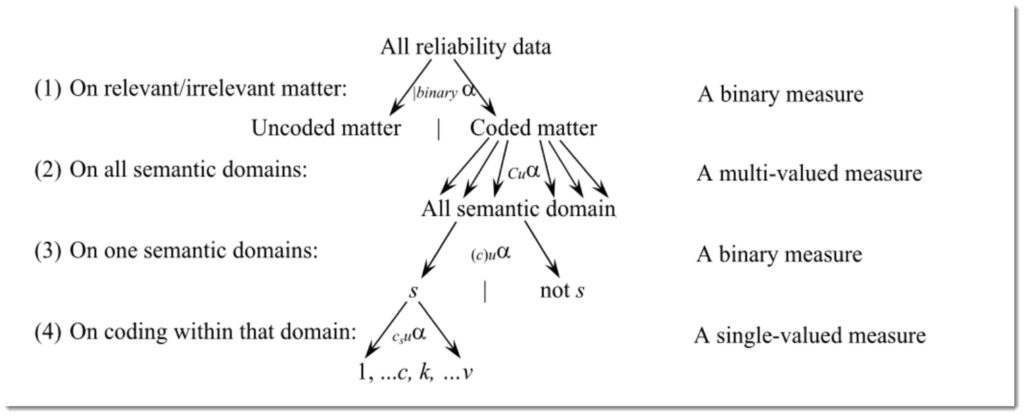

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

![PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/4-Table3-1.png)

PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

Pitfalls in the use of kappa when interpreting agreement between multiple raters in reliability studies

PDF) The Kappa Statistic in Reliability Studies: Use, Interpretation, and Sample Size Requirements Perspective | mitz ser - Academia.edu

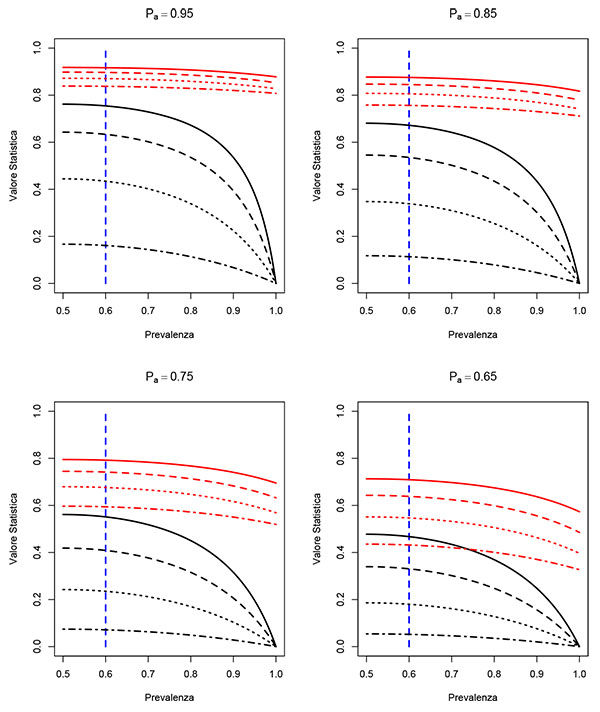

The comparison of kappa and PABAK with changes of the prevalence of the... | Download Scientific Diagram

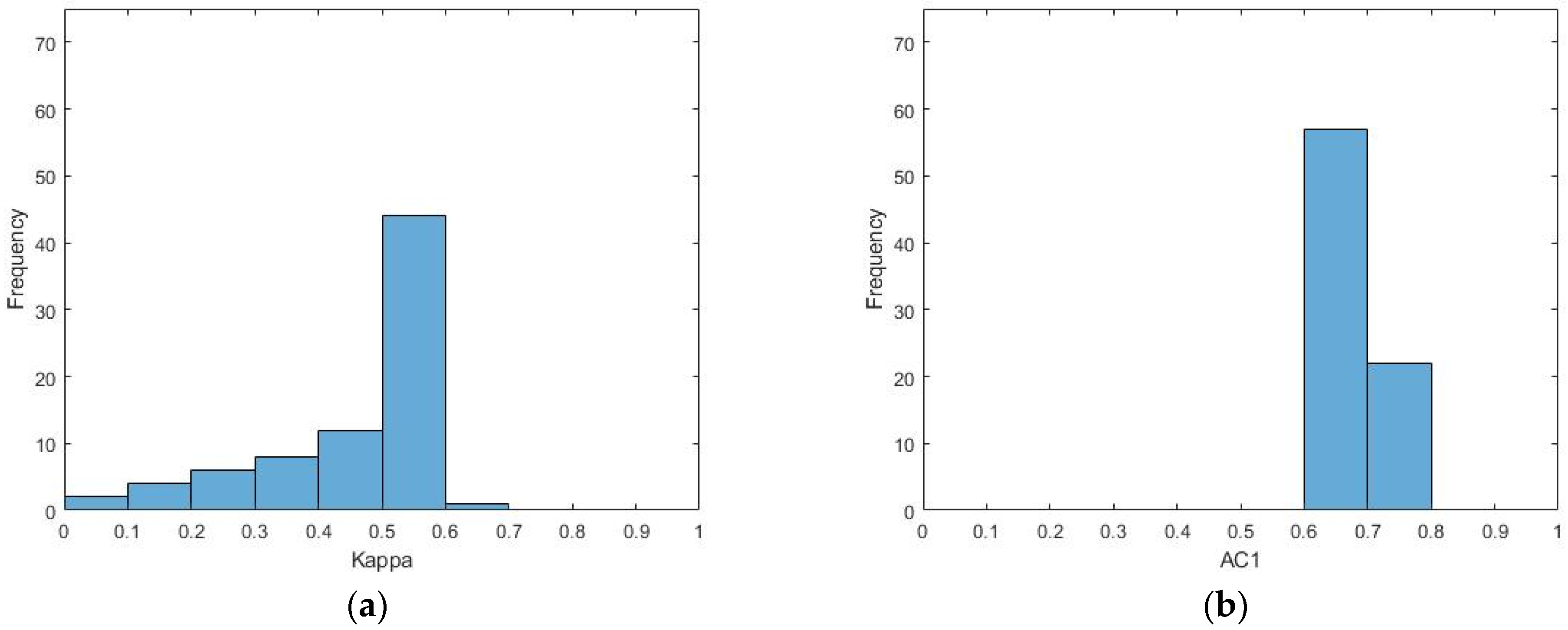

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

free-marginal multirater/multicategories agreement indexes and the K categories PABAK - Cross Validated

Comparing dependent kappa coefficients obtained on multilevel data - Vanbelle - 2017 - Biometrical Journal - Wiley Online Library

![PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/2-Figure2-1.png)